Pinterest Empowers Users: New Controls Combat 'AI Slop' in Feeds, Boost Transparency

By: @devadigax

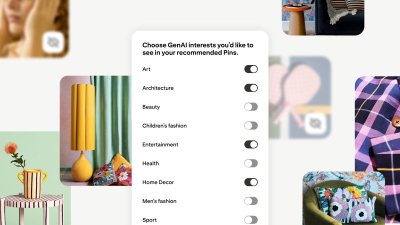

In a move that reflects the growing public debate over artificial intelligence and content authenticity, Pinterest is rolling out new controls that give users more say over the AI-generated content appearing in their feeds. The visual discovery platform announced that it will let users limit the amount of what many now call "AI slop" (a colloquial term for low-quality, generic, or uninspired content produced by AI models) and that it will make its AI content labels more prominent. The initiative is a notable step by a major platform to address user concerns about the spread of synthetic media, and it reinforces Pinterest's emphasis on a curated, high-quality experience.

The term "AI slop" has gained traction as generative AI tools, particularly large language models and image generators, have become widely accessible. While these tools offer real creative potential, they also produce a flood of content that can feel repetitive, bland, or even uncanny. Users across many platforms have voiced fatigue with feeds increasingly filled with content that lacks human nuance, originality, or genuine connection. For Pinterest, a platform built on inspiration and creativity, the integrity and value of its visual feed are central to its appeal. By introducing these controls, Pinterest is responding directly to growing user demand for more say over what they see.

The new settings let users tune their feed preferences, in effect filtering out content identified as AI-generated. Pinterest has not fully detailed how its systems will identify and categorize "AI-generated content," but the approach likely combines internal detection models, metadata analysis, and possibly user reporting. The move is less about blanket filtering than about choice. Users who value human-made content, or who simply want to avoid the pitfalls of AI-generated visuals, can now tailor their experience accordingly. Conversely, users interested in AI-generated art or designs can presumably opt to see more of it, which points to a flexible approach to content moderation.
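Pinterest has not published how its filter works. As a purely illustrative sketch, assume each pin carries a hypothetical `ai_score` (a 0 to 1 likelihood produced by some detection model) and that the user's setting down-weights, rather than removes, likely-AI pins when the feed is ranked. Under those assumptions, a preference-aware re-ranking step might look like this. Every name here (`AIPreference`, `Pin`, `rank_feed`, the 0.8 threshold, and the weights) is hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class AIPreference(Enum):
    """Hypothetical per-user setting; Pinterest has not published its design."""
    SEE_LESS = "see_less"
    DEFAULT = "default"
    SEE_MORE = "see_more"


@dataclass
class Pin:
    pin_id: str
    relevance: float  # base ranking score from an unspecified recommender
    ai_score: float   # hypothetical 0..1 likelihood that the pin is AI-generated


# Hypothetical multipliers applied to likely-AI pins under each preference.
AI_WEIGHT = {
    AIPreference.SEE_LESS: 0.2,
    AIPreference.DEFAULT: 1.0,
    AIPreference.SEE_MORE: 1.5,
}


def rank_feed(pins: list[Pin], pref: AIPreference, threshold: float = 0.8) -> list[Pin]:
    """Re-rank pins, scaling the score of those flagged as likely AI-generated.

    Flagged pins are never dropped: "see less" only lowers their score,
    which limits rather than bans AI content.
    """
    def adjusted(pin: Pin) -> float:
        if pin.ai_score >= threshold:
            return pin.relevance * AI_WEIGHT[pref]
        return pin.relevance

    # Highest adjusted score first.
    return sorted(pins, key=adjusted, reverse=True)


if __name__ == "__main__":
    pins = [Pin("a", 0.9, 0.95), Pin("b", 0.6, 0.1), Pin("c", 0.5, 0.85)]
    for pref in AIPreference:
        print(pref.value, [p.pin_id for p in rank_feed(pins, pref)])
```

With these sample values, "see less" pushes the likely-AI pin "a" below the human-made pin "b", while "see more" lifts pin "c" above it. Down-weighting instead of deleting mirrors the announcement's language about limiting AI content; a real system would also have to handle detector mistakes, which this sketch ignores.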

Beyond the filtering controls, Pinterest is also making its AI content labels more visible. Transparency is a cornerstone of responsible AI deployment, and clear labels on AI-generated content are essential for informed consumption. At a time when deepfakes and synthetic media blur the line between reality and fabrication, knowing whether a piece of content was made by a person or generated by a machine matters. More visible labels help users identify the source of content at a glance, fostering trust and letting them decide consciously what to engage with. This proactive stance on transparency could position Pinterest as a leader in navigating the ethical complexities of generative AI.

Pinterest's decision is not an isolated move but part of a broader industry shift. As generative AI evolves rapidly, social media platforms, content aggregators, and creative communities are grappling with how to integrate these tools responsibly without degrading the user experience or eroding trust. Other platforms have faced similar challenges, from managing AI-generated spam to ensuring proper attribution for AI-assisted work. Pinterest’s approach could serve as a blueprint for offering user-centric controls that let individuals customize their digital environments in an increasingly AI-saturated world.

The implications extend to both creators and consumers. For human creators on Pinterest, the change could set their original work apart, potentially raising its perceived value and visibility among users who opt out of AI-generated content. For AI artists and others using generative tools, it underscores the need for high-quality, distinctive output that rises above the "slop" label, as well as the value of platforms that label content transparently. Ultimately, it encourages a more thoughtful approach to creation, whether human or AI-assisted, that favors quality over sheer volume.

This initiative also touches upon the ongoing debate about the role of AI in creative industries. While AI can augment human creativity and democratize access to design tools, unchecked proliferation of generic AI content risks devaluing original thought and artistic effort. By giving users the ability to curate their feeds, Pinterest is subtly signaling its support for a balanced ecosystem where both human and AI creativity can coexist, but with user preference as the ultimate arbiter. It acknowledges that while AI is a powerful tool, it should serve human needs and desires, not overwhelm them.

In conclusion, Pinterest's new controls to limit "AI slop" and its more visible labels are a forward-thinking response to a changing digital landscape. They champion user agency, promote transparency, and set a precedent for how major platforms can responsibly integrate and manage generative AI content. As AI continues to reshape how we create, consume, and interact with information, platforms that prioritize user choice and content quality are likely to stand out, offering more engaging, trustworthy, and ultimately more human digital experiences. Pinterest's move suggests that user control may become a defining feature of the next generation of AI-powered platforms.

AI Tool Buzz