Meta's AI Powers Automatic Video Dubbing for Instagram Reels: Expanding Global Reach with Seamless Translation

@devadigax · 19 Aug 2025

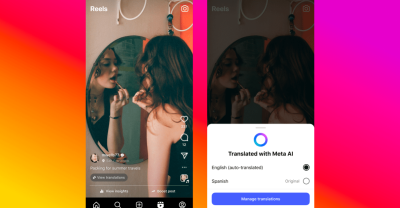

Meta is expanding its AI-powered translation capabilities, bringing automatic video dubbing to Instagram Reels and enhancing its existing Facebook features. The feature uses AI to translate video content into multiple languages, syncing the dubbed audio with the speaker's lip movements and matching their vocal tone. For content creators and businesses alike, it is a significant step forward in accessibility and global reach.

The new feature goes beyond simple subtitle generation. It uses AI models to analyze the original audio and video, then generates a dubbed audio track in the target language that matches the speaker's lip movements. This synchronization is crucial for a natural, engaging viewing experience, avoiding the jarring mismatches often seen in less sophisticated translation efforts. The process involves not just translation but also voice synthesis tailored to mimic the original speaker's voice characteristics as closely as possible. The result is a markedly better viewer experience than solutions that rely solely on subtitles or poorly synced dubbing.

Currently, the rollout is phased, meaning it's not immediately available to all users. Meta is likely employing a gradual release strategy to monitor performance and address any unforeseen issues before making it universally accessible. This approach is standard practice in the tech industry to minimize disruptions and ensure the new feature functions as intended for a large user base. It allows Meta to gather user feedback and make necessary adjustments before a full-scale launch, avoiding potentially negative impacts on user experience.

This move aligns with Meta's broader strategy to foster global communication and bridge language barriers. The company has been investing heavily in AI research and development, with a particular focus on natural language processing (NLP) and machine translation. The automatic video dubbing feature represents a major milestone in this ongoing effort. By making video content accessible to a wider global audience, Meta aims to increase user engagement, enhance content discoverability, and ultimately boost the platform's overall reach and influence.

The implications for content creators are substantial. Previously, creators aiming for international audiences faced significant logistical and financial hurdles in creating multiple language versions of their videos. This involved either hiring professional translators and voice actors for each language or relying on less effective and less professional subtitle solutions. Meta's AI-powered dubbing eliminates these barriers, allowing creators to reach a significantly larger audience with minimal additional effort. This could lead to a substantial increase in content diversity and accessibility, as creators are freed from the constraints of language limitations.

The business implications are equally significant. Businesses using Instagram for marketing and advertising can now use this tool to reach international markets more easily and cost-effectively, which could open growth opportunities for those seeking to expand their customer base globally. The enhanced accessibility could also level the playing field for smaller businesses and independent creators who may not have the resources to produce multilingual content by traditional means.

Despite the advantages, challenges remain. The accuracy of the AI translation and voice synthesis is crucial. While significant advances have been made in this area, nuances in language and cultural context can still cause errors. Meta will need to continuously refine its AI models to improve accuracy and address potential biases that may emerge in the translation process. Moreover, addressing the ethical implications of AI-generated content, such as potential misuse or the spread of misinformation, will require ongoing monitoring and mitigation strategies.

Looking ahead, we can expect Meta to further develop and refine this AI-powered dubbing feature. We might see improved voice synthesis, support for a broader range of languages, and even more advanced capabilities, such as automatic adaptation of video content to different cultural norms. The future of social media is intertwined with the advancement of AI, and Meta's initiative sets a precedent for other platforms to follow, pointing toward a new era of easier global communication through video.

AI Tool Buzz
