Meta Boosts Parental Controls for Teen AI Chatbots Amidst Broader Safety Push

By: @devadigax

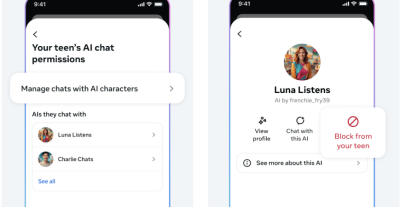

Meta, a titan in the social media and technology landscape, has announced a significant expansion of its parental control features, specifically targeting the interactions between teenagers and its rapidly deployed AI chatbots. This move comes after an aggressive rollout of AI capabilities across its platforms, including Instagram, Messenger, and WhatsApp, and is widely seen as a strategic effort to address growing concerns about youth safety online and to rehabilitate its public image. The new options aim to give parents greater insight into their teens' digital conversations with AI characters and empower them to set crucial limits on usage.

The enthusiastic integration of generative AI into Meta's ecosystem has been a defining characteristic of its recent strategy. From AI-powered assistants that can answer questions and generate content to creative tools that enhance visual storytelling, AI has permeated the user experience. While these innovations promise enhanced engagement and utility, their widespread availability, particularly to a young demographic, has simultaneously raised alarms among parents, educators, and child safety advocates. The rapid pace of AI deployment often outstrips the development of robust safety protocols, creating a reactive rather than proactive cycle of innovation and mitigation.

Details of the new controls were not fully spelled out in the initial announcement, but they are expected to include a suite of tools designed to offer transparency and management. Parents will likely gain access to dashboards that summarize their teens' interactions with AI chatbots, potentially including frequency of use, types of queries, and content categories. The ability to set time limits on AI chatbot engagement, or even restrict access to certain AI functionalities, is expected to be a key component, giving guardians direct levers to manage screen time and content exposure. Notifications about specific types of AI interactions or changes in usage patterns could also be part of this expanded toolkit, keeping parents informed without requiring them to monitor every digital exchange.

This initiative is not merely a technical update; it's a strategic response to a complex challenge. Meta has a well-documented history of facing intense scrutiny over the impact of its platforms on young users, ranging from concerns about mental health effects linked to Instagram to issues surrounding data privacy and exposure to inappropriate content. The company has invested heavily in AI, positioning it as the future of its products. However, to maintain trust and avoid further regulatory backlash, particularly concerning minors, it must demonstrate a clear commitment to responsible AI development and deployment. This latest announcement is a tangible step in that direction, signaling an acknowledgment of the unique risks AI poses, especially to developing minds.

The ethical considerations surrounding AI and youth are multifaceted. One primary concern is the potential for AI chatbots to generate or facilitate access to misinformation, disinformation, or even harmful content. While AI models are trained with safeguards, they are not infallible and can sometimes "hallucinate" or provide biased information. For impressionable teenagers, distinguishing fact from fiction or identifying problematic content can be challenging. Another significant worry is privacy; interactions with AI chatbots, like any digital communication, generate data. Questions arise about how this data is collected, stored, and used, and what implications it has for the privacy of minors.

Beyond content and privacy, there are developmental and psychological impacts to consider. Over-reliance on AI for social interaction or emotional support could potentially hinder the development of crucial human social skills, empathy, and critical thinking. Teens might form unhealthy attachments to AI characters, seeking comfort or advice from a non-sentient entity rather than human peers, family, or mental health professionals. The pervasive nature of AI could also contribute to increased screen time and potential digital addiction, further exacerbated by the engaging and often personalized nature of AI conversations. Safeguarding against these risks requires a multi-pronged approach, and parental controls are a vital first line of defense.

Meta's move also reflects a broader trend within the technology industry, where companies are grappling with the responsible integration of powerful AI tools. Giants like Google, Microsoft, and OpenAI have all faced similar questions regarding AI safety, bias, and the protection of vulnerable users. Developing "responsible AI" frameworks has become a priority, involving significant investments in ethical AI research, safety testing, and the implementation of guardrails. However, the sheer scale and speed of AI innovation mean that these frameworks are constantly evolving, and companies are often playing catch-up.

For parents, the digital landscape presents an ever-evolving challenge. Many struggle to keep pace with new technologies and understand the nuances of their children's online activities. Tools that simplify oversight and provide actionable insights are invaluable. Meta's new controls aim to empower parents, offering them the means to navigate the complexities of AI interaction without necessarily having to become AI experts themselves. This balance between enabling parental guidance and respecting teen autonomy will be crucial for the success and acceptance of these new features.

Ultimately, Meta's expansion of parental controls for teen AI use is a necessary and welcome development. It underscores the growing recognition within the tech industry that innovation must be tempered with responsibility, especially when it concerns the youngest users. While these controls represent a significant step forward, they are part of an ongoing conversation. The future will demand continuous iteration, robust collaboration between tech companies, parents, educators, and policymakers, and an unwavering commitment to prioritizing the safety and well-being of young people as AI continues to reshape our digital world. The journey to truly safe and beneficial AI for all users, particularly adolescents, is far from over, and proactive measures like these are essential to navigating its complex terrain.

AI Tool Buzz
