Democratizing AI: How Red Hat Enterprise Linux AI is Breaking Down LLM Barriers

What Makes Red Hat Enterprise Linux AI Different?

Think of Red Hat Enterprise Linux AI as a complete AI toolkit in one package. It's not just another AI platform. It's a thoughtfully assembled foundation that combines enterprise reliability with open source innovation, built around three core components designed to work together:

The Red Hat AI Inference Server sits at the heart of the platform, delivering consistent performance whether you're running on-premises, in the cloud, or across hybrid environments. What's impressive here is the broad hardware support: whether your accelerators come from NVIDIA, Intel, or AMD, the platform provides optimized inference capabilities.

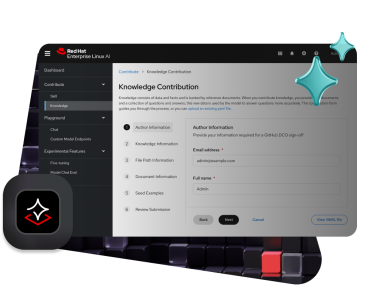

Then there's InstructLab, which might be the most exciting piece of the puzzle. This community-driven model alignment tool opens up LLM development to a much wider audience. Instead of needing a PhD in machine learning and access to massive compute resources, developers can contribute to and customize AI models through a more accessible interface.

The platform also includes a bootable Red Hat Enterprise Linux image that comes pre-loaded with popular AI libraries like PyTorch. This eliminates the often frustrating setup process that can eat up days or weeks of development time. You get hardware-optimized performance right out of the box.
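If you want to confirm that a pre-loaded PyTorch stack actually sees your accelerator, a short check like the one below is one way to do it. This is a minimal, generic sketch rather than a Red Hat-provided tool: it assumes only that PyTorch may be installed, and it uses standard PyTorch calls (`torch.cuda.is_available`, `torch.version.hip`, and `torch.xpu`, which exists only in recent PyTorch releases). The function name `describe_accelerator` is hypothetical.

```python
import importlib.util


def describe_accelerator() -> str:
    """Return a short label for the PyTorch device backend usable here.

    Hypothetical helper: returns "torch-not-installed" instead of raising
    when PyTorch is missing, so it is safe to run anywhere.
    """
    if importlib.util.find_spec("torch") is None:
        return "torch-not-installed"

    import torch

    # ROCm builds of PyTorch also report through the torch.cuda namespace,
    # so this branch covers both NVIDIA and AMD GPUs.
    if torch.cuda.is_available():
        # torch.version.hip is a version string on ROCm builds, None otherwise.
        backend = "rocm" if getattr(torch.version, "hip", None) else "cuda"
        return f"{backend}:{torch.cuda.get_device_name(0)}"

    # Intel GPUs are exposed via torch.xpu in recent PyTorch releases; other
    # Intel accelerators may need vendor plugins this sketch does not check.
    xpu = getattr(torch, "xpu", None)
    if xpu is not None and xpu.is_available():
        return "xpu"

    return "cpu"


if __name__ == "__main__":
    print(describe_accelerator())
```

Running it prints a label such as `cuda:<device name>`, `rocm:<device name>`, `xpu`, or `cpu`, which makes it easy to spot when a workload would silently fall back to the CPU.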

Breaking Down the AI Monopoly

Here's why this matters: generative AI shouldn't be controlled by just a handful of tech giants. When only a few companies control the most powerful AI models, innovation slows down, costs stay high, and entire industries get locked out of the AI revolution.

Red Hat's open source approach flips this script. By making LLM development more accessible and transparent, they're enabling a new wave of AI innovation that comes from the community rather than corporate boardrooms. Small startups can compete with tech giants. Academic researchers can contribute breakthrough improvements. Enterprise teams can customize models for their specific needs without vendor lock-in.

Real-World Impact

The practical implications are significant. Organizations can now deploy AI solutions without worrying about unpredictable licensing costs or depending on external API calls for critical business functions. They can examine how their AI models were built and tuned, understand their limitations, and improve them for their specific use cases.

For developers, this means shorter development cycles and more control over their AI implementations. Instead of wrestling with compatibility issues or waiting for vendor support, teams can focus on building innovative applications that solve real problems.

The Enterprise Advantage

Red Hat hasn't forgotten about enterprise needs either. The platform includes enterprise-grade technical support and Open Source Assurance legal protections, both crucial considerations for businesses deploying AI at scale. This combination of innovation and reliability makes it easier for enterprises to embrace AI without compromising on security, compliance, or support requirements.

Looking Forward

The AI landscape is evolving rapidly, and platforms like Red Hat Enterprise Linux AI represent a crucial shift toward democratization and transparency. As more organizations gain access to powerful AI tools, we're likely to see an explosion of creativity and innovation that benefits everyone.

Whether you're a startup looking to integrate AI into your product, an enterprise seeking to modernize operations, or a developer curious about contributing to the future of AI, Red Hat Enterprise Linux AI offers a path forward that doesn't require choosing between innovation and control.

The future of AI shouldn't be determined by a few tech giants; it should be shaped by the collective creativity and needs of the entire technology community. Red Hat Enterprise Linux AI is making that future possible, one open source contribution at a time.

AI Tool Buzz