Anthropic's Strategic Shift: Claude Learns 'Skills' to Conquer Workplace Challenges

By: @devadigax

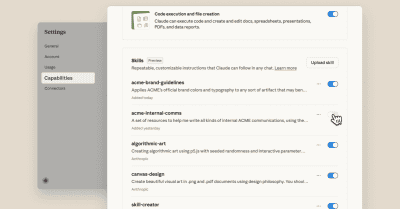

For years, the concept of an artificial intelligence agent, a digital assistant capable of independently performing complex tasks and interacting with various systems, has captivated the tech world. It existed first as a theoretical ideal, then as an intriguing experiment, often demonstrating flashes of brilliance alongside frustrating limitations. Now the landscape is shifting rapidly, with major AI companies dedicating substantial resources to turning these conceptual agents into genuinely useful tools for professionals and consumers alike. Among them, Anthropic on Thursday announced a significant evolution for its flagship AI model, Claude, emphasizing the development of practical "skills" designed to enhance its utility in real-world work environments.

This pivot marks a critical juncture in the maturation of AI. Moving beyond merely generating text or answering queries, the focus on "skills" for Claude signifies a deeper ambition: to enable the AI to understand, plan, execute, and iterate on multi-step processes. For an AI, a "skill" isn't just about knowing facts; it's about the capability to perform actions. These actions range from interacting with external APIs and databases, automating routine software tasks, and synthesizing information from disparate sources to drafting complex documents and managing elements of project workflows. It’s about operationalizing intelligence, allowing Claude not just to *tell* you how to do something, but to *do* it.

The push towards agentic AI is a natural progression from the large language models (LLMs) that have dominated the conversation in recent years. While LLMs excel at understanding and generating human-like text, they often struggle with the sequential reasoning, tool integration, and persistent memory required for sophisticated task execution. By imbuing Claude with "skills," Anthropic aims to bridge this gap, enabling it to act more autonomously and reliably. Imagine an AI that can not only summarize a lengthy legal brief but also cross-reference it with case law databases, highlight relevant precedents, and then draft a preliminary response, all without constant human oversight. This is the promise of skill-based AI agents.

Anthropic's move is particularly noteworthy given its foundational commitment to AI safety and responsible development through its "Constitutional AI" approach. Applying this framework to agentic capabilities means not just building powerful tools, but building *safe* and *controllable* ones. This translates into agents that can explain their reasoning, adhere to ethical guidelines, and operate within defined boundaries, minimizing the risks of unintended consequences. For businesses considering integrating AI agents into their core operations, this focus on safety and transparency could be a significant differentiator and a key factor in building trust.

The implications for businesses and individual professionals are profound. The introduction of highly skilled AI agents like Claude promises to unlock

AI Tool Buzz
